Elon Musk names his AI Grok. What is Grok?

In the context of AI, grokking refers to a phenomenon where a neural network, after appearing to overfit its training data (performing well only on the training set), suddenly and dramatically improves its ability to generalize to new, unseen data. This "delayed generalization" can occur after a period where the model's performance on the training data seems to have plateaued. Essentially, the model "groks" the underlying patterns in the data rather than just memorizing it, much as humans do, and this understanding shows up as a sudden improvement in its predictions on data it has not seen before. Grokking is like the AI equivalent of enlightenment, the moment when it "begins to see the light".

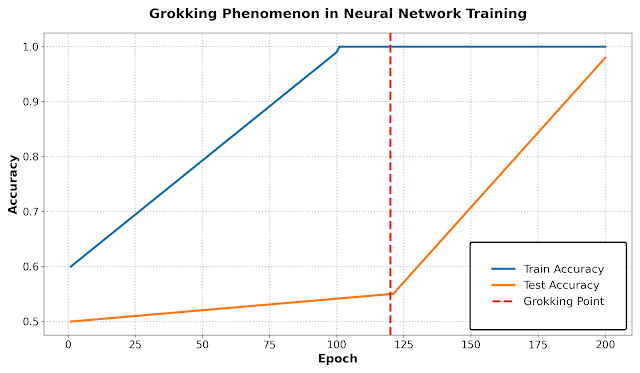

The chart above illustrates the meaning of grokking. A neural network is trained on data to make predictions. The blue line depicts what normally happens as the training cycles progress: prediction accuracy keeps rising but hits a plateau at around 120 training cycles.

The orange line depicts a grokking situation where, instead of plateauing at around the 120th cycle, the neural network suddenly improves its prediction accuracy by leaps and bounds.
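One rough way to put a number on "delayed generalization" is to compare when the training curve and the validation curve each first cross some accuracy level. The sketch below does that in plain Python. The function names, the 0.95 threshold, and the two curves are all made up for illustration; they are not taken from the chart above or from any real training run.

```python
def first_epoch_reaching(curve, threshold):
    """Return the first epoch index where curve >= threshold, or None."""
    for epoch, value in enumerate(curve):
        if value >= threshold:
            return epoch
    return None


def generalization_delay(train_acc, val_acc, threshold=0.95):
    """Epochs between training and validation accuracy reaching threshold.

    Returns None if either curve never reaches the threshold.
    """
    train_epoch = first_epoch_reaching(train_acc, threshold)
    val_epoch = first_epoch_reaching(val_acc, threshold)
    if train_epoch is None or val_epoch is None:
        return None
    return val_epoch - train_epoch


# Hypothetical curves: training accuracy saturates early,
# validation accuracy stays low for a while and then jumps.
train_acc = [0.2, 0.6, 0.97, 0.99, 1.0, 1.0, 1.0, 1.0]
val_acc = [0.1, 0.2, 0.25, 0.3, 0.3, 0.35, 0.9, 0.98]

delay = generalization_delay(train_acc, val_acc)
print("epochs between fitting and generalizing:", delay)
```

A large positive delay is the signature described in this post: the model fits the training set long before it generalizes. In a real run the curves would have thousands of points, and the threshold would be a judgment call.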

When does grokking occur? According to AI textbooks, overfitting, for example from adding too many neurons to the hidden layer of a neural network, causes performance on unseen data to deteriorate. For small models, a common habit is to use no more than about 5 neurons. But there have been many instances where grokking happened in models with a great number of neurons. Grokking also happens when the neural network is left to train for a long time. This is reportedly what happened in 2020, when a member of a team at OpenAI forgot to turn off his computer before going on vacation. When he came back, he found that the AI had grokked and was suddenly capable of performing at a whole new level. It is as if it had understood something deeper about the problem rather than simply memorizing answers for the data it was trained on.

Grokking is also associated with sparsity in the model and with mechanisms that penalize complexity. Activation functions such as ReLU output zero for negative inputs, which leaves many neurons inactive, while regularization such as weight decay shrinks unnecessary weights toward zero. It is also associated with scale, such as a complex model fed by huge data sets.
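To make "sparsity" and "penalizing complexity" a little more concrete, here is a minimal sketch in plain Python. It shows how ReLU zeroes out negative inputs, and how a weight-decay term shrinks weights on every update. Weight decay is my own choice of example for a complexity penalty, and the function names, learning rate, and numbers are all hypothetical.

```python
def relu(x):
    """ReLU activation: pass positive values through, zero out the rest."""
    return x if x > 0.0 else 0.0


def activation_sparsity(pre_activations):
    """Fraction of units whose ReLU output is exactly zero."""
    outputs = [relu(x) for x in pre_activations]
    return sum(1 for y in outputs if y == 0.0) / len(outputs)


def sgd_step_with_weight_decay(weights, grads, lr=0.1, weight_decay=0.01):
    """One gradient step on loss + (weight_decay / 2) * sum(w ** 2)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


# Hypothetical pre-activation values for four hidden neurons.
pre_activations = [-1.5, 0.3, -0.2, 2.0]
sparsity = activation_sparsity(pre_activations)  # two of four outputs are zero

# With zero gradient from the data, weight decay alone pulls weights toward 0.
weights = [1.0, -2.0]
decayed = sgd_step_with_weight_decay(weights, grads=[0.0, 0.0])

print("sparsity:", sparsity)
print("weights after one decay-only step:", decayed)
```

The point of the second half is that a weight the data does not "defend" with a gradient slowly fades away, which is one way a long training run can end up with a simpler model than it started with.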

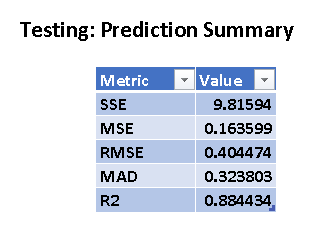

I cannot induce grokking myself for lack of computing power and computing time. But my model below, which had 128 neurons in a single hidden layer and ran for 10,000 cycles, showed that performance did not deteriorate. In fact, at this point its performance as measured by Mean Absolute Deviation was 0.32.

Chart 2: My model specifications

Chart 3: My model performance run-time

My model performance summary on testing data
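For readers who want to compute the same kind of score, here is a small sketch of the metric in plain Python. It assumes that "Mean Absolute Deviation" here means the average absolute difference between predictions and true values on the test data (often called mean absolute error). The function name and the sample numbers are hypothetical and are not my model's actual outputs.

```python
def mean_absolute_deviation(predictions, targets):
    """Average of |prediction - target| over all test examples."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not predictions:
        raise ValueError("need at least one example")
    total = sum(abs(p - t) for p, t in zip(predictions, targets))
    return total / len(predictions)


# Hypothetical predictions and true values, for illustration only.
predictions = [1.0, 2.0, 3.0]
targets = [1.5, 2.0, 2.0]

score = mean_absolute_deviation(predictions, targets)
print("mean absolute deviation:", score)
```

A lower score is better, and the value is in the same units as the quantity being predicted, which makes it easy to interpret.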
